AvenueASL: Postsecondary American Sign Language E-Assessment

American Sign Language (ASL) has evolved into the third most widely used language in America, preceded only by English and Spanish (Welles, 2004). Currently, more than 500 colleges and universities in the U.S. offer ASL instruction as a world language (Wilcox, 2004). Between 1992 and 2006, the rapid increase in demand for postsecondary ASL instruction and linguistic study created a wide range of instructional challenges, including 1) assessing, measuring, and documenting learner progress; and 2) providing formative feedback through efficient, effective, and technically valid means (Miller, Hooper, Rose, & Montalto-Rook, 2008).

AvenueASL design overview

The most widespread practice for assessing ASL fluency involves evaluating video recordings of interviews with individual students (Newell & Caccamise, 1992). Prior to 2006, approximately 1,800 ASL students per semester at the University of Minnesota completed mid-semester and final exams by renting a video camera from the program office, recording a 15- to 20-minute conversation with a fellow student, and submitting the videotape for evaluation (Miller, Hooper, & Rose, 2005). Instructors then reviewed the video (a process often lasting 45 minutes per videotape, due in large part to fast-forwarding through incomplete edits, false starts, and ‘redos’ of the exam), assessed the performance, and recorded a single-digit evaluation score with brief textual feedback comments on a note card. Ultimately, these assessment and examination practices proved burdensome for both students and instructors (Hooper, Miller, Rose, & Veletsianos, 2007). Instructors noted that evaluation time averaged three to five weeks per course, delaying learner feedback and limiting valuable reflection opportunities for students. Students noted that ‘meaningless’ scores on a 10-point scale and abbreviated feedback comments provided little to no guidance for improving their actual ASL signing expression. Furthermore, the feedback delay made it difficult for instructors to modify classroom instruction based on evaluated deficiencies in learner performance.

To enhance the nature of postsecondary ASL instruction and assessment, we developed AvenueASL, an integrated e-assessment system to capture, evaluate, and manage ASL learner performances. The system enhances the efficiency of the existing assessment process using innovative solutions that are reliable, valid, cost-effective, and efficient. The online environment enables students to capture videos of sign-language assessment tasks and individually build online portfolios for monitoring the progress of their performances over time. These portfolios allow students and instructors to establish learning objectives, document language proficiency, and demonstrate maturing communication abilities, ultimately encouraging students to be more reflective regarding their ASL communication skills (Lupton, 1998). Furthermore, instructors can efficiently provide multiple forms of evaluation feedback (e.g., text, numeric, video, etc.) based on the needs and learning styles of individual students.

Furthermore, the AvenueASL environment utilizes an emergent assessment system known as Curriculum Based Measurements (CBM) (Deno, 1985). CBM were initially designed as a “yard stick” to measure progress in reading, writing, and mathematics. They are easy to implement, cost-effective, and sensitive to student progress, yet valid and reliable (Fuchs, Fuchs, & Hamlett, 1989; Frank & Gerken, 1990). Although CBM have been used in K-12 settings, little research has validated the process for ASL in higher education (Allinder & Eccarius, 1999). To this effect, we have developed standardized CBM assessments to promote continuous progress monitoring in ASL instruction at the postsecondary level (Miller et al., 2008).

These innovations solve an important practical problem and create significant instructional potential for postsecondary ASL education. Together, the methods used to assess student performance and the techniques used to gather, store, and deploy progress data create a system that is technologically sophisticated and pedagogically sound, resulting in improved ASL learning and instructional assessment (Miller et al., 2008).

The AvenueASL e-assessment environment

The AvenueASL e-assessment environment was designed to establish four cohesive layers of interaction: (a) Capture, a platform for students to capture, submit, and archive ASL video performances; (b) Evaluate, a setting for instructors to evaluate and report student performance and feedback; (c) Portfolio, a visualization tool where students can monitor their personal performance and feedback; and (d) Manage, an administration component to coordinate all student demographic and feedback data.

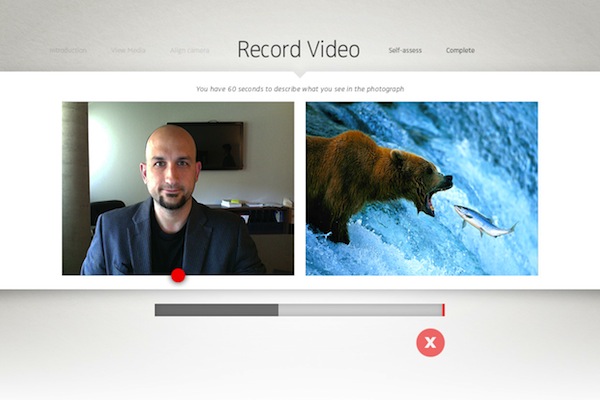

The first layer, the Capture layer, is used independently by students to record ASL performances with a webcam (see Figure 1). Students from each level of the ASL program complete three CBM fluency assessments designed specifically for this environment: Picture Naming, Photo Description, and Story Retell. The capture component eliminates existing financial and organizational obstacles of recording, submitting, and managing several hundred videotapes each semester. Content appropriate to each ASL level is dynamically integrated into the environment and individualized for each student (i.e. content is generated randomly from over 1000 testing videos, photographs, and illustrations to maintain testing security and objectivity).

Figure 1. Student recording screen with the student’s performance video on the left and the task media stimulus video on the right.
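The random, level-appropriate selection of testing content described above can be illustrated with a short Python sketch. It is not the AvenueASL implementation: the `Stimulus` record, its field names, and the `draw_stimuli` function are hypothetical, and the sketch assumes only that each item in the content pool is tagged with an ASL level and a task type.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Stimulus:
    """One testing video, photograph, or illustration (hypothetical record)."""
    stimulus_id: str
    level: int   # ASL course level the item was written for
    task: str    # e.g. "picture_naming", "photo_description", "story_retell"


def draw_stimuli(pool, level, task, count, rng=None):
    """Pick `count` distinct stimuli for one student, level, and task."""
    rng = rng or random.Random()
    eligible = [s for s in pool if s.level == level and s.task == task]
    if count > len(eligible):
        raise ValueError("not enough stimuli for this level and task")
    # sample() draws without replacement, so no item repeats within a test
    return rng.sample(eligible, count)
```

Because each student receives an independent draw from the pool, two students sitting the same assessment are unlikely to see the same set of items.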

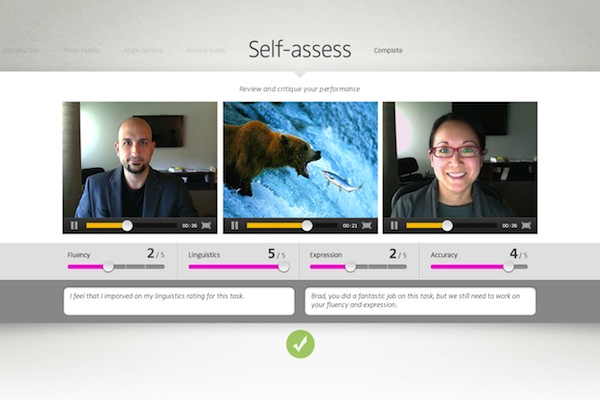

The second layer, the Evaluate layer (see Figure 2), is a networked assessment instrument used by instructors to evaluate student performance videos recorded in the Capture layer. In addition to submitting evaluation scores based on the four ASL CBM evaluation criteria (fluency, linguistics, expression, and accuracy), instructors can tailor feedback to individual student needs by using various feedback modalities (i.e., text, numeric, and recorded video). In other words, instructors can record video evaluations, in addition to traditional numerical and textual feedback, for students who require further assistance with their expressive and communicative skills. This feature provides students with a feedback package covering all aspects of their performances, both quantitative and qualitative. Furthermore, the Evaluate layer creates opportunities for instructors to monitor and adapt to students’ evolving needs. For example, by gaining immediate access to students’ performances (rather than waiting through the previous three- to five-week delay), instructors can identify current learning problems and modify personal teaching practices in the classroom.

Figure 2. Student and instructor feedback screen displaying the task video, student performance video, student self-assessment data, and instructor feedback video, in addition to numeric/textual evaluation data.

Assessment data are often used to assign grades rather than to improve performance. However, the third layer, the Portfolio, is an environment where students view their evaluations and feedback, monitor personal progress as they advance through the ASL course-sequence, and reflect upon and set personal goals. The Portfolio layer is an environment where personal histories are developed in a setting that encourages students to compare and contrast personal and model performance. In addition, integrated performance and feedback visualizations were designed to allow students and instructors to visually display language proficiency gains and demonstrate maturing communication abilities. Students can view their progress over time, compare and contrast with other students in their class or across their level of ASL study, and click on tests to view the individualized feedback package for each performance, providing on-demand reflection and self-assessment. Further, students can compare their self-assessment scores with instructor feedback to enhance their understanding of the expected CBM-based outcomes. The goal of these feedback packages and performance portfolios was to encourage students to be more reflective regarding their ASL communication skills (Lupton, 1998; Miller et al., 2008).

AvenueASL current and future integration

Currently, more than 25 instructors and 2,000+ students at the University of Minnesota use the environment each year, in addition to thousands of students and instructors from universities and institutions nationwide. During the 2011-2012 academic year, students completed in excess of 450,000 performance tasks in the environment. At present, more than 3.5 million video performances have been captured and evaluated by students and instructors in the environment, amounting to what is believed to be the largest collection of ASL e-assessment performances ever captured in an online environment.

Based on the widespread success of the AvenueASL environment, we focused our recent development efforts on placing even more power in the teachers’ hands in order to promote integration in other world language domains. To this effect, the current version of AvenueASL (v3.0) now incorporates the new Test Builder component, which allows teachers to create fully customizable testing experiences, including the ability to construct personalized evaluation matrices and record or upload unique testing stimulus media tailored to the daily needs of the classroom. This feature has created the opportunity for Avenue to be integrated successfully into any K-20+ world language instructional environment. In the fall of 2012 we will be piloting the new environment with more than 100,000 secondary and postsecondary Spanish, French, German, Chinese, and ESL students and instructors.

AvenueDHH: Reading, Writing, and Language Development for K-8 DHH Learners

A century of research documents the negative impact of hearing loss on the development of English language skills (i.e., reading and writing), and the resulting differences between hearing children and children who are deaf or hard-of-hearing (DHH) (Marschark & Harris, 1996; Rose, McAnally & Quigley, 2004; Marschark, Lang & Albertini, 2002). Progress-monitoring systems currently used by teachers of DHH students are typically unreliable, complex, and time-consuming (Esterbrooks & Huston, 2007), provide little functional information for feedback and instructional decision making, and do little to promote learning (Ewoldt, 1987). Likewise, standardized tools designed for the general population are insensitive to DHH students’ progress, lack validity and reliability (Kelly, 2005; Luckner & Streckler, 2007; Odom et al., 2005), and are difficult to interpret (Yoshinago-Itano, Snyder, & Mayberry, 1996). Furthermore, available paper-pencil measures (e.g., grade level MAZE passages) are insensitive to small increments of growth exhibited by beginning DHH readers (Rose, McAnally & Quigley, 2004).

AvenueDHH design overview

Research suggests DHH teachers have difficulty using the results of existing progress-monitoring measures to determine if their instruction, assessments, and feedback lead to improved student progress in reading and writing (Yoshinago-Itano et al., 1996). Therefore, teachers of DHH children typically resort to subjective impressions and anecdotal information, often resulting in feedback and assessment information that is unused, misused, or misunderstood. This is the challenge we are addressing in the development of AvenueDHH, a universally-accessible e-assessment environment for DHH teachers, students, and parents.

The AvenueDHH e-assessment environment

The goal of AvenueDHH is to transform the assessment, feedback, and progress-monitoring strategies in reading, writing, and language development for DHH students in grades K-8. The system is comprised of seven categories of assessment tasks (i.e. picture naming, photo description, word slash tests, MAZE passages, signed/oral reading, story retell, and story completion) and supports the digital capture of multiple communication modalities (i.e. oral, signed, and Cued Speech) and languages (i.e. ASL and English) common to children with hearing loss in the U.S. The three primary assessment tasks that have been developed to date are MAZE, Word Slash, and Real-Time Reading. These tests are described in the following sections.

MAZE Test. Cloze tests, which have been used for decades to monitor literacy performance, are created by removing every seventh word from a text passage. Students complete the tests by writing into the blank spaces the words they think were deleted. The MAZE Test in AvenueDHH is a modified Cloze procedure: the student selects the appropriate word from three available choices and moves it into the blank space in the passage (see Figure 3). Each blank space has three words (i.e., the missing word and two distractors) that appear under the space; distractors are drawn from a different part of speech than the correct word (e.g., if the missing word is a verb, the distractors must not be verbs).
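The construction rule above (every seventh word removed, three choices per blank, distractors from a different part of speech) can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the AvenueDHH code: the passage is assumed to arrive already tokenized with a parallel list of part-of-speech labels, and `MazeItem`, `build_maze`, `score_maze`, and the `distractor_pool` mapping are hypothetical names.

```python
import random
from dataclasses import dataclass


@dataclass
class MazeItem:
    position: int        # index of the removed word in the passage
    answer: str          # the removed word
    choices: list        # the answer plus two distractors, shuffled


def build_maze(words, pos_tags, distractor_pool, nth=7, rng=None):
    """Blank every `nth` word and attach two distractors to each blank.

    `distractor_pool` maps a part-of-speech label to candidate words.
    Distractors are only drawn from labels other than the answer's label.
    """
    rng = rng or random.Random()
    items = []
    for i in range(nth - 1, len(words), nth):
        answer, tag = words[i], pos_tags[i]
        candidates = sorted({
            w for label, pool in distractor_pool.items() if label != tag
            for w in pool if w.lower() != answer.lower()
        })
        choices = [answer] + rng.sample(candidates, 2)
        rng.shuffle(choices)
        items.append(MazeItem(i, answer, choices))
    return items


def score_maze(items, responses):
    """Count the blanks where the selected word matches the removed word."""
    return sum(1 for item, r in zip(items, responses) if r == item.answer)
```

Because every blank has exactly one correct choice, a passage built this way can be graded automatically by comparing each selection with the stored answer.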

Figure 3. A student completing an automatically graded MAZE passage in the AvenueDHH software. Level goals are represented by various characters at the bottom of the screen.

We experimented with the look and feel of the MAZE passages to maintain visual appeal, to address programming issues, and to create a positive user experience. The blank spaces had to be large enough to contain any word in the database, yet small enough to avoid visual imbalance, while remaining visually appealing. To maintain fluid interactions and smooth transitions, the process of selecting and deselecting words was carefully choreographed, and the opacity of each word was animated to its final state.

Slash Test. Slash tests are text passages, displayed in upper case, in which the spaces between words are removed to create a continuous flow of characters. In print, students draw vertical lines where they believe word breaks should be. On the computer, the student clicks between words to insert (or remove) word breaks between the characters. Throughout the Slash Test design process we focused considerably on the experience of a young child interacting with the text. Characters to the right of a word break animate horizontally to emphasize the selection, and a vertical line appears between the words. Rolling the mouse over successive characters introduces a ‘temporary’ vertical line—a visual cue suggesting how a word break would appear if selected. By using different lengths, widths, and colors to draw vertical lines between characters and carefully timing each animation, we were able to increase usability by reducing inaccuracy and frustration, and create a fundamentally different aesthetic experience from what is possible with paper and pencil.
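The underlying data model of a Slash Test is simple enough to sketch in Python. The sketch below is an assumption-laden illustration, not the AvenueDHH implementation: it assumes a passage without punctuation, represents a word break as the character index at which the next word starts, and uses hypothetical function names. The correct/incorrect/missed tally is likewise an assumed scoring scheme, since the source does not specify how Slash Tests are scored.

```python
def make_slash_text(passage):
    """Return the upper-case run-together text and the true break positions.

    A break position k means "a word break belongs just before character k".
    """
    words = passage.upper().split()
    text = "".join(words)
    breaks = set()
    offset = 0
    for word in words[:-1]:
        offset += len(word)
        breaks.add(offset)
    return text, breaks


def toggle_break(selected, position):
    """A click inserts a break at `position`; a second click removes it."""
    return selected ^ {position}


def score_slash(true_breaks, selected):
    """Tally correct, incorrect, and missed word breaks (assumed scheme)."""
    return {
        "correct": len(true_breaks & selected),
        "incorrect": len(selected - true_breaks),
        "missed": len(true_breaks - selected),
    }
```

For the passage "the cat sat", the student sees THECATSAT, and the true breaks fall before characters 3 and 6.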

Real-Time Reading. The real-time reading test (RTR) measures sign-language or oral text fluency. The student is presented with a passage on the screen and prompted to read the text within a set time frame (i.e., 1 to 3 minutes depending on passage length and difficulty). The student reads the text orally, in sign language, or both. Similar to the Capture layer in AvenueASL, the student’s performance is recorded by a webcam and then stored online for teacher assessment. Separate interfaces are used for spoken and signed performances. Assessing oral reading involves tracking the number of words read correctly and incorrectly. Sign language is assessed using a rubric with descriptive qualitative measures for fluency, linguistics, accuracy, and expression.
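The oral-reading tally described above can be turned into a fluency summary as follows. The source states only that words read correctly and incorrectly are tracked; the per-minute rate and accuracy ratio below are conventional CBM oral-reading summaries that we assume here for illustration, and the function name is hypothetical.

```python
def oral_reading_summary(words_correct, words_incorrect, seconds):
    """Summarize one timed oral reading as a rate and an accuracy ratio."""
    if seconds <= 0:
        raise ValueError("reading time must be positive")
    attempted = words_correct + words_incorrect
    return {
        # words read correctly, normalized to a one-minute rate
        "words_correct_per_minute": words_correct * 60 / seconds,
        # share of attempted words that were read correctly
        "accuracy": words_correct / attempted if attempted else 0.0,
    }
```

Normalizing to a per-minute rate lets teachers compare readings that used different time limits within the 1- to 3-minute window.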

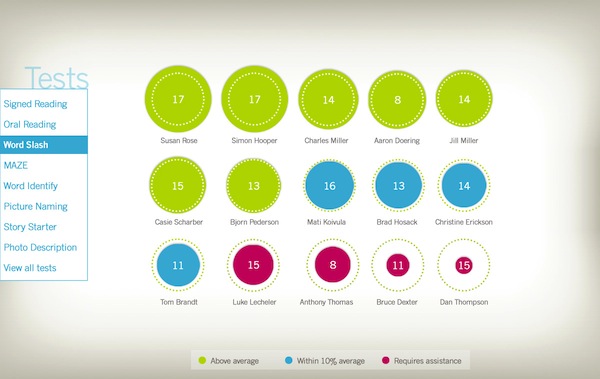

Finally, a unique design challenge in the development of AvenueDHH was how to present performance data for multiple students on multiple tasks to teachers in an easy-to-understand manner. Figure 4 illustrates a potential solution to this challenge that we are prototyping with current instructors. Here, teachers are able to quickly see how students are performing on each task. Performances can be placed in three general categories that correspond to the color of the circles: successful performance (green), performance that requires intervention (red), and performance that is within 10% of average (blue). The final category is particularly useful for teachers who aim to modify the difficulty of subsequent tests in order to ensure a student is neither struggling nor insufficiently challenged. These types of static and interactive visualizations are currently being developed and integrated into both the teacher and student sections of the AvenueDHH environment. In addition to authentic classroom use of the system, these features will serve as a foundation for our future implementation research.

Figure 4. Teacher visualization of current student progress and goal proximity on the Word Slash test.
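One way to read the three color categories in the teacher visualization is as a simple banding rule around the class average. The Python sketch below is an interpretation, not the prototype's logic: the source defines only the blue band (within 10% of average), so treating scores above that band as green and scores below it as red is our assumption, and `classify_performance` is a hypothetical name.

```python
def classify_performance(score, class_average, band=0.10):
    """Map a score to a circle color relative to the class average.

    Assumed rule: within `band` of the average is blue, above the band is
    green (successful), below the band is red (requires intervention).
    """
    if class_average <= 0:
        raise ValueError("class average must be positive")
    if abs(score - class_average) <= band * class_average:
        return "blue"
    return "green" if score > class_average else "red"
```

With a class average of 50, for example, a score of 54 falls in the blue band, while 56 is green and 44 is red.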

AvenueDHH current and future integration

Currently, more than 1,000 DHH educators and students across several states are using the AvenueDHH environment in their classrooms. We recently received U.S. Department of Education Stepping Stones Phase II funding for a 3-year period (spring 2012-spring 2015) to scale-up state-level evaluation and nationwide dissemination. Phase II involves research on the effectiveness of AvenueDHH in diverse K-8 DHH classrooms throughout the nation, as well as a design-based research exploration of the evolving theoretical foundations, design principles, and integration models for the e-assessment environment.

As we move forward, our implementation research design will focus on a) validating the assessment measures and tasks employed throughout the system, b) monitoring the growth patterns of students in the academic areas of reading and written expression, and c) analyzing teachers’, students’, and parents’ use of data generated by the system. We will employ rigorous field-based research to determine the effectiveness of the AvenueDHH system on the literacy performance of students who are DHH and students with learning difficulties. In addition, we will continue to use a design-based research methodology integrated with classical quantitative studies to modify and improve our design using data regarding the relative effectiveness, usability, and performance of the system for teachers, students, and parents.

To complement our future research, we are redesigning the entire system from the ground up using several new technologies. The current versions of AvenueDHH were developed primarily using the Adobe Flash and Flex authoring environments, with the online environment itself running in the Flash Player of the browser. However, many of the teachers we worked with over the past few years have experienced problems with getting the latest Flash Player installed in their classrooms, or have noted their technology staff does not allow use of the webcams in a Flash-based environment (for security and privacy reasons). Therefore, we have decided to make use of modern web technologies and redevelop the system using a combination of HTML5, jQuery, and AJAX. These technologies are both device and browser agnostic, which means the new version of AvenueDHH will be able to run on all platforms regardless of Flash, aligning with many of the security protocols these schools implement. We are also developing custom iOS AvenueDHH Apps for the real-time and story-retell reading tests. Students will be able to use their camera-enabled iPad or iPod Touch to read and record a real-time video of their performance, which will then be submitted to their teacher and accessible either through the AvenueDHH online environment or the AvenueDHH iOS App.

Ultimately, we believe that future design, integration, and evaluation of the AvenueDHH e-assessment environment will 1) increase language, reading, and writing proficiency for DHH students in grades K-8; 2) achieve widespread integration of a flexible technology-based system that requires only minimal external maintenance and is scalable to diverse institutions; and 3) improve the nature of research and instructional decision-making by teachers in the DHH reading, writing, and language development community. Furthermore, we anticipate the theoretical, pedagogical, and technological evolution of the AvenueDHH environment will have significant implications for contemporary assessment methodologies in secondary DHH language development, as well as other K-12 world language development and assessment.

Designing Forward for E-Assessment

Through considering the AvenueASL and AvenueDHH e-assessment environments at a macro-level, we understand that the key to their success is the recursive and informative roles of pedagogy, design, feedback, learner experience, and research in each environment’s design. From each environment’s conception, to its first iterations, to its final design, theoretical and practical perspectives on pedagogy, feedback, and research were omnipresent and continually infused in each stage of the development process – nothing was an add-on. We believe that the recursive loop of these elements throughout the entire design process is at the heart of developing effective learning environments.

For more information on using AvenueASL in your world language classroom today, please visit http://lt.umn.edu/ave. For more information on the AvenueDHH project, please follow http://lt.umn.edu for future details.